Website backups are something I have always been quite paranoid about. Even though providers such as DigitalOcean already give you automated server snapshot backups, I had never found a solution that gave me peace of mind. The best setup I had come up with was to rsync server snapshot archives across all my servers. Since at least some of them are in different datacenters, it gives me the impression that the chances of a catastrophe hitting all of them at the same time are, at least... lower. Of course, connecting machines to each other in this manner also makes the whole network much more vulnerable.

While setting up another API in Google's Cloud Console, I found out about their Nearline Storage service, launched last July. Stored archives can be retrieved within about 3 seconds, either through a web interface or from the command line, and storage costs $0.01 per GB per month.

This looks great, but how easy is it to use? Integrating it into my workflow was a breeze, and I definitely recommend it. Below is a quick overview of how to get started.

Setting Up Nearline

The first step is to set up your Google Cloud account and billing details. I won't cover that here, but Google often offers trial credit, such as $300 to be used within 60 days, so look out for offers like that if you are creating a new account.

Then create a New Project. Give it a reasonable name and ID, and pick the App Engine location closest to your operations. After being redirected to your project's dashboard, click the "Get Started" link under Cloud Storage and create your first bucket, selecting the Nearline storage class.
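If you prefer the command line, the same bucket can also be created with gsutil once the Cloud SDK from the next section is installed: `gsutil mb` takes `-c` for the storage class and `-l` for the location. This is a minimal sketch; the helper name is hypothetical, the bucket name matches the one used later in this post, and the US location is only a placeholder default (bucket names are globally unique, so pick your own).

```shell
# Hypothetical helper: create a bucket with the Nearline storage class.
# $1 = bucket name (must be globally unique)
# $2 = location (placeholder default: the US multi-region)
create_nearline_bucket() {
  gsutil mb -c nearline -l "${2:-US}" "gs://$1/"
}
```

For example, `create_nearline_bucket backup-website` creates `gs://backup-website/` in the US location.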

At this point we're done with the web interface. Let's now head to the machine we want to back up; I assume we are connecting to a Debian-based VPS over SSH. We will install the Google Cloud SDK, which includes gsutil, the program used to upload archives to Nearline. Don't jump straight to sudo apt-get install gsutil: that will not work for you.

```bash
# Create an environment variable for the correct distribution
export CLOUD_SDK_REPO="cloud-sdk-$(lsb_release -c -s)"

# Add the Cloud SDK distribution URI as a package source
echo "deb http://packages.cloud.google.com/apt $CLOUD_SDK_REPO main" | sudo tee /etc/apt/sources.list.d/google-cloud-sdk.list

# Import the Google Cloud public key
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

# Update and install the Cloud SDK
sudo apt-get update && sudo apt-get install google-cloud-sdk

# Run gcloud init to get started
gcloud init
```

gcloud init will prompt you to complete the OAuth setup by pasting a verification token. One of the great things about gsutil is that it ships with its own implementation of rsync. To speed up file checksum comparisons, however, it is recommended to install the compiled version of crcmod, as shown below. This step is optional but greatly improves performance.

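```bash
# Compiler, Python headers and setuptools needed to build crcmod's C extension
sudo apt-get install gcc python-dev python-setuptools
sudo easy_install -U pip

# Remove any existing crcmod, then reinstall it so the compiled version is used
sudo pip uninstall crcmod
sudo pip install -U crcmod
```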
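To confirm that the compiled crcmod is actually being used, `gsutil version -l` prints a "compiled crcmod" line in its output. The helper below is a hypothetical convenience (the function name is made up) that only checks for that line reading True.

```shell
# Hypothetical helper: succeed if gsutil reports the compiled (C extension)
# crcmod rather than the slow pure-Python fallback.
crcmod_is_compiled() {
  gsutil version -l 2>/dev/null | grep -q 'compiled crcmod: True'
}
```

For example: `crcmod_is_compiled && echo "compiled crcmod in use" || echo "still using pure-Python crcmod"`.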

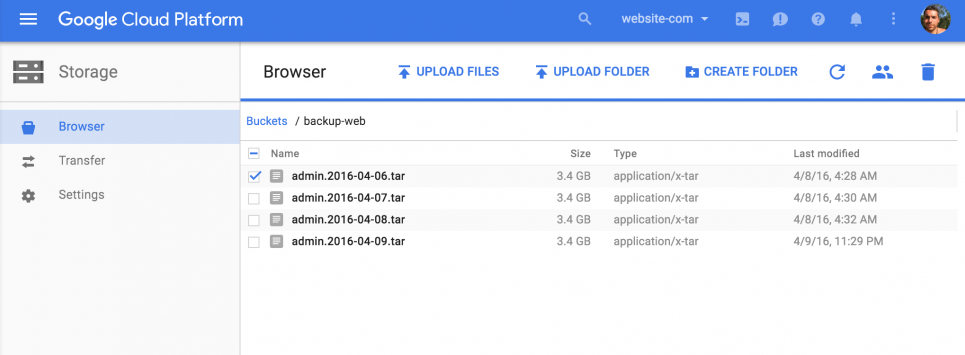

We're done with setup. Now, to sync one of your directories with the bucket created earlier, run the following command and confirm that the new files appear in the Google Cloud Console web interface.

```bash
gsutil rsync -R -c /backup/ gs://backup-website/  # recursive and using checksums
```
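Before the first real upload it can be reassuring to preview what would be transferred: gsutil rsync accepts `-n` for a dry run that only reports what would be copied. The wrapper below is a hypothetical convenience; its name and the BACKUP_DIR/BUCKET variables are assumptions, defaulting to the paths used in this post.

```shell
# Hypothetical wrapper around the sync command above.
# BACKUP_DIR and BUCKET are illustrative variables, not gsutil settings.
BACKUP_DIR="${BACKUP_DIR:-/backup/}"
BUCKET="${BUCKET:-gs://backup-website/}"

nearline_sync() {
  if [ "${1:-}" = "--dry-run" ]; then
    # -n: only list what would be copied, upload nothing
    gsutil rsync -n -R -c "$BACKUP_DIR" "$BUCKET"
  else
    gsutil rsync -R -c "$BACKUP_DIR" "$BUCKET"
  fi
}
```

Run `nearline_sync --dry-run` first, check the listed files, then run `nearline_sync` for the actual upload.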

For the complete gsutil rsync reference, head to the documentation page. Below I'll show exactly how I set things up on my end.

Automating Backups

I already had a script creating daily tar archives of all the data directories on my server, which I won't cover here. So I'll assume you have a /backup/ directory on your server with files such as backup-2016-04-08.tar, backup-2016-04-09.tar, etc. Our goals here are:

- sync the /backup/ directory to Nearline once a day

- remove backups older than X days from Nearline
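Since that archive script isn't covered here, the following is only a hypothetical stand-in that produces files in the same backup-YYYY-MM-DD.tar format; the function name and arguments are made up for illustration, and a real setup would likely archive several directories.

```shell
# Hypothetical stand-in for a daily archive script (not the author's):
# packs SRC_DIR into DEST_DIR/backup-YYYY-MM-DD.tar and prints the path.
# Usage: make_daily_archive SRC_DIR DEST_DIR
make_daily_archive() {
  src_dir=$1
  dest_dir=$2
  archive="$dest_dir/backup-$(date +%F).tar"
  mkdir -p "$dest_dir" || return 1
  # -C changes to the parent directory so the archive holds relative paths.
  tar -cf "$archive" -C "$(dirname "$src_dir")" "$(basename "$src_dir")" || return 1
  echo "$archive"
}
```

For example, `make_daily_archive /var/www /backup` would create something like /backup/backup-2016-04-10.tar.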

Cron

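To achieve daily automation we simply have to create a cron job. On Debian, the scripts in /etc/cron.daily are executed as root by run-parts, which needs a shebang line to run them and skips any file whose name contains a dot, so the job below has no .sh suffix. Because the job runs as root, gsutil must also be authenticated for the root user (for example by running gcloud init once as root). The following two commands create and configure the job:

```bash
printf '#!/bin/sh\ngsutil rsync -R -c /backup/ gs://backup-website/\n' | sudo tee /etc/cron.daily/gcs-nearline-sync > /dev/null

sudo chmod +x /etc/cron.daily/gcs-nearline-sync
```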
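To double-check that a job will actually be picked up, `run-parts --test /etc/cron.daily` lists the scripts that would run without running them. By default, Debian's run-parts only accepts names made entirely of ASCII letters, digits, underscores and hyphens; the hypothetical helper below encodes that rule so a candidate name can be checked before installing a script.

```shell
# Hypothetical helper mirroring run-parts' default naming rule
# (no --lsbsysinit or --regex): only ASCII letters, digits,
# underscores and hyphens; empty names are rejected too.
runparts_name_ok() {
  case $1 in
    ''|*[!A-Za-z0-9_-]*) return 1 ;;
    *) return 0 ;;
  esac
}
```

For example, `runparts_name_ok gcs-nearline-sync` succeeds, while `runparts_name_ok gcs-nearline-sync.sh` fails because of the dot.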

Removing Old Backups

Google Cloud gives you a nice range of settings to configure your storage buckets through Object Lifecycle Management. One of these lets you set a maximum age for objects, past which they are automatically deleted. To set this rule on your bucket, create a JSON file on your server (here /bucket-config.json; make sure the path you later pass to gsutil points to wherever you actually saved it) containing the rule definition. The following rule deletes objects 365 days after they were created:

```json
{
  "lifecycle": {
    "rule": [
      {
        "action": {"type": "Delete"},
        "condition": {"age": 365}
      }
    ]
  }
}
```

Now submit it to Google Cloud using the command:

```bash
gsutil lifecycle set /bucket-config.json gs://backup-website
```
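To make the "X days" from the goals above configurable, the rule can also be generated on the fly. This is a sketch under assumptions: the function name and arguments are hypothetical, it writes the same rule to a temporary file (so no config file needs to live at a fixed path such as /bucket-config.json), applies it with `gsutil lifecycle set` and then prints the active configuration with `gsutil lifecycle get`.

```shell
# Hypothetical helper: apply a "delete after DAYS days" lifecycle rule
# and print the bucket's resulting configuration.
# Usage: apply_retention DAYS gs://bucket-name
apply_retention() {
  days=$1
  bucket=$2
  # Reject anything that is not a whole number of days.
  case $days in
    ''|*[!0-9]*) echo "apply_retention: DAYS must be a number" >&2; return 1 ;;
  esac
  config_file=$(mktemp) || return 1
  cat > "$config_file" <<EOF
{
  "lifecycle": {
    "rule": [
      {
        "action": {"type": "Delete"},
        "condition": {"age": $days}
      }
    ]
  }
}
EOF
  gsutil lifecycle set "$config_file" "$bucket"
  status=$?
  rm -f "$config_file"
  [ "$status" -eq 0 ] || return "$status"
  gsutil lifecycle get "$bucket"
}
```

For example, `apply_retention 30 gs://backup-website` would keep roughly a month of archives in the bucket.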

Done, all set, peace of mind. The one thing I would add, though, is an email notification in case the cron script exits with a non-zero status.
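A minimal sketch of that idea, assuming the server has a working `mail` command (for example from the mailutils or bsd-mailx packages) and a local mail setup able to deliver it. The function name and the NOTIFY_TO, BACKUP_DIR and BUCKET variables are placeholders, not part of gsutil or cron.

```shell
# Hypothetical body for the daily cron job: run the Nearline sync and,
# if gsutil exits with a non-zero status, email its output.
# Assumes a working `mail` command; NOTIFY_TO is a placeholder recipient.
BACKUP_DIR="${BACKUP_DIR:-/backup/}"
BUCKET="${BUCKET:-gs://backup-website/}"
NOTIFY_TO="${NOTIFY_TO:-root}"

sync_and_notify() {
  # Capture stdout and stderr so the error text ends up in the email.
  sync_output=$(gsutil rsync -R -c "$BACKUP_DIR" "$BUCKET" 2>&1)
  sync_status=$?
  if [ "$sync_status" -ne 0 ]; then
    printf 'gsutil rsync exited with status %s\n\n%s\n' "$sync_status" "$sync_output" \
      | mail -s "Nearline backup sync failed on $(uname -n)" "$NOTIFY_TO"
  fi
  return "$sync_status"
}
```

In the cron job, the script would define this function and call `sync_and_notify` as its last line, so the failing exit status is still reported to cron as well.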

Published by António Andrade on Sunday, 10th of April 2016. Opinions and views expressed in this article are my own and do not reflect those of my employers or their clients.

Cover image by Martin Jernberg.


Replies

Posted by Chris Alavoine on 01/05/17 4:47pm

Thanks for this post, most useful. I am getting an error on the last stage when trying to set the json file using:

```
gsutil lifecycle set /bucket-config.json gs://blah-blah
```

I get the following:

```
Traceback (most recent call last):
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gsutil", line 22, in <module>
    gsutil.RunMain()
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gsutil.py", line 113, in RunMain
    sys.exit(gslib.__main__.main())
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gslib/__main__.py", line 383, in main
    perf_trace_token=perf_trace_token)
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gslib/__main__.py", line 577, in _RunNamedCommandAndHandleExceptions
    collect_analytics=True)
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gslib/command_runner.py", line 299, in RunNamedCommand
    return_code = command_inst.RunCommand()
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gslib/commands/lifecycle.py", line 197, in RunCommand
    return self._SetLifecycleConfig()
  File "/usr/lib/google-cloud-sdk/platform/gsutil/gslib/commands/lifecycle.py", line 147, in _SetLifecycleConfig
    lifecycle_file = open(lifecycle_arg, 'r')
IOError: [Errno 2] No such file or directory: u'/bucket-config.json'
```

Have you seen this before? I've tried running the command from various different machines with the same result.

Thanks,

Chris.
